SOME STORIES IN THE HISTORY OF MEDICINE

Lecture 1: Introduction and the Discovery of Germs

Adam Blatner, M.D.

Also, three supplements, which you can click on to link to related webpages:

A. A very brief overview of the history of medicine, from prehistory to the Renaissance (just to get you oriented).

B. A further overview, up to modern times.

C. A further history of microscopy.

Then, the remaining lectures (to be posted as I prepare and give them):

2: Contagion, Infection, Antisepsis; 3: The Early History of Immunology; 4: The Discovery of Anesthesia;

5: Recognizing Nutritional Deficiencies; 6: Hygiene: Sanitation, Hookworm, Dental Floss, & Summary

(First Posted around January 27, 2009; revised and re-posted, February 8, 2009)

My background is that of a physician, an M.D., and indeed, I was one of those kids who wanted to be a doctor from an early age. I had been sickly as a child, and doctors were my heroes. Even then I enjoyed reading about the history of medicine, and it has been a kind of spirituality for me, a way of staying connected with the desire to help make this a better world, along with other kinds of idealism.

In my mind, what it means to be a professional is that one works to advance the depth of knowledge and wisdom within that field. There’s a phrase in Latin: “Ars longa, vita brevis”: the art (of medicine) is long, life is short. This means that however brief is a single lifetime in which

Today we’re going to begin a series of lectures that focuses on only a few of the many, many stories in medicine’s history, just some high points that I thought you’d enjoy. Future lectures in this series will also be posted on this website (see links above). I’m also open to your emailing me!

On another webpage is a quick overview, from prehistoric times through the Egyptian, Greek, and Roman civilizations, as well as elsewhere. Things picked up beginning around the 1400s CE, as we approach what later historians came to call the Renaissance, a word meaning “re-birth,” because other forms of knowledge from ancient times and the Islamic world (i.e., knowledge that didn’t come through the monasteries) became more recognized. In this period, too, the first principles of science, dealing with questioning authority and experimenting, were articulated.

For thousands of years people have sought to help in healing, and this process involved not just treatment but also more accurate diagnosis. The word diagnosis combines gnosis, knowing, with dia, as in diaphanous (semi-transparent, see-through), and so it means understanding. It’s not just a matter of slapping a clever-sounding label on something. Understanding in turn involves a continuous process of research, which implies various forms of inductive and deductive reasoning, theory building, and experimentation. This last, the practice of experiment, entered the mainstream with the emergence of science as a way of dealing with knowledge, instead of blindly accepting authority. I’ll note here that this new way of thinking has by no means become universally established, and traditional modes of learning still prevail in many fields and regions.

Consider, for example, the emerging field of anatomy. It became conceivable at least to a few daring people to think that what was in the books that had guided the world for what seemed like forever—maybe a thousand years or more—might be (gulp) mistaken! Anatomy involves what is inside, and that requires cutting open the human body to look—which was not just kinda icky, but religiously taboo. So it wasn’t done. People speculated. Some people back in the Roman era dissected animals and just guessed that humans were also built something like that. That’s true in a very rough way, but there are lots of differences in the specifics, and knowing the right anatomy then leads to the construction of more accurate theories of disease, which then can lead oh so gradually to the search for appropriate treatments.

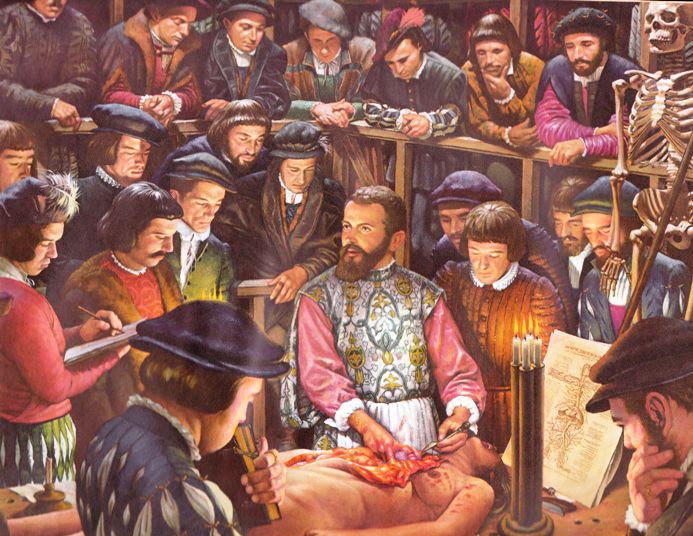

Consider this picture:

Around the same time, in Italy, there were a few people who dared to look for themselves, and one of these was the polymath (one who is brilliant in many ways) Leonardo da Vinci. He made the next two pictures around 1501 and 1508, based on observations of actual dissections.

It was a characteristic of the Renaissance that in a few places people were pushing the edges of what had been taboo in most of Europe, and dissection was one of these frontiers. Interestingly, in spite of creating far more accurate pictures than others had done previously, Leonardo to some extent still saw what recognized authorities had told him he would see, and in certain crucial details that we’ll note later, he was wrong. For example, note the thin lines, or vaguely perceived ducts, running between the top of the uterus (in spite of the male-looking chest, this is a woman’s body) and the breast area. We now know they don’t exist, but back then they were said to, so he put them in. It’s kinda messy in there during an actual dissection, so you’re not always sure what you’re looking at. The belief that these channels existed was part and parcel of the mistaken theories of why women sometimes got fatal infections after having babies, and we’ll talk about that more in the next lecture.

About 50 years later another pioneer, Andreas Vesalius, did the first real anatomical research. He really looked, and looked carefully, refusing to rely on the traditional textbooks that had remained authoritative for a thousand years!


The last few slides are instructive in part because this process of moving towards science was not clear-cut. One could be progressive in one realm and still mired in traditional modes of thought in another. For example, Isaac Newton, the person considered the epitome of science and modernity, was certainly a pioneer in a number of ways, but he was also deeply immersed in alchemy (see below, and compare with the notes about alchemy on another webpage on this site, about one-third of the way down)...

So, in the century following Columbus’ discovery of what he called a “new world” (it wasn’t new to the folks who lived here), there were many discoveries: of worlds out there in the heavens, and a new view of the world inside the human body. We’ll talk soon about yet another world within that world, the one revealed as folks began to examine tissues microscopically! Most of the events in these talks, though, took place between around 1790 and 1880, that is, mainly in the 19th century. That’s when your great-great-grandparents were alive, so it’s not all that long ago!

History is a vast field, but I enjoy focusing on one aspect at a time. In other lecture series I’ve spoken about the history of writing, the history of psychotherapy, and so forth. Being a physician, an M.D., I’m especially interested in the history of medicine, because for me it offers lessons for today about the complexity of events and about how knowledge comes to be disseminated and used in a culture.

Epistemology

This is a big word that refers to the philosophical endeavor to consider seriously the question of how we know what we know. There are a number of categories:

- What can never be known by the human mind

- What may well be discovered someday

- What only a very few people know---but most don't

- What some think they know, but they're mistaken

- What some partly know, because they have begun to put together the clues correctly

- What some know, but most others don't yet believe them

- What ideas have now been accepted by the major authorities in a given field, but not yet much known by the general population

- What has become familiar knowledge to parts of the population but is still unfamiliar or even a bit shocking to many others

- What has become commonly accepted by almost everyone in a culture

. . .

There is also the interesting problem that arises when something in the last category, what might be called "common sense," is later overturned by further research, requiring a "paradigm shift," as the process above repeats itself!

Wonder

I am hoping during these lectures not only to inform you in an interesting way (such as through the use of this slide projector rather than an overhead projector) but also to evoke a bit of wonder, a sense of “wow!” that will enrich your life. My goal is to make life more interesting to you. What you can do is bring a readiness to say wow! And that involves noticing that you become habituated: you can do that with noise, with smells, with cherished others (“I’ve grown accustomed to her face, the little songs she sings...”). So notice that you have this tendency to get used to things and to take them for granted. In this case, consider that what we’ve gotten used to (our general level of health, our life expectancy, the idea that medicine nowadays seems to work for an increasing number of problems) has only really become commonplace in our own lifetime. It has been estimated that only around a century ago, in our grandparents’ time, did the odds change for the better, so that going to the doctor was more likely to help than to do more harm.

In the days before the breakthroughs to be mentioned (immunizations, anesthetics, antiseptic and later antibiotic treatment, nutritional supplements, and the levels of sanitation we now take as basic), people got sick a lot more, there were horrible epidemics, lots of kids died, and people suffered more. The kind of trauma that can lead to what we now call post-traumatic stress disorder (PTSD) was part of the everyday struggle for life a century or two ago for large numbers of people. We tend to hear more in literature about the leisured and protected classes, but the point is that we should recognize more vividly how new all this is.

Indeed, consider the following: If you were born around 1930 to 1940, your parents, assuming an average age of parenting of around 25, were born around 1905-1915, and their parents (your grandparents) around, say, 1880-1890, which was just when germ theory was becoming widely known. But their parents, your great-grandparents, may well have been born around 1855-1865, when anesthesia had just begun to be developed and germ theory was still unknown! (We'll be talking about this in the next lecture.) So the events in these stories fall within the lifetimes of people only a few generations back. (I mean, some of my friends are now great-grandparents! Wow!)

I also consider that the skill (yes! it's a skill you can cultivate!) of wondering is related to keeping alive your curiosity and your "young-at-heart" spirit; and I think it's a core element in a liberal education.

Another general theme about history is that events are complex: each event involves many other events, such as personal stories, backgrounds, cultural trends at the time, the development of parallel technology, and so forth. Because of this, I would suggest that history is "fractal" in nature. In the mid-1970s a new concept, fractals, was brought into mathematics and science, and it has come to explain many things. It notes that many natural things, like a coastline, the weather, or the branching of trees, have sub-parts, and the sub-parts have sub-sub-parts, and if you examine them carefully, the fine divisions seem never to end. (This has made for a whole new type of art, and if you Google "fractals" you can see innumerable amazing pictures based on this. In the 1960s and '70s, there was "op" art, and fractal art takes it in another direction!) So history is also fractal in the sense that you can keep examining the details, and each one opens up a field of other questions and causes, and examining any of those opens up others, unendingly.

Microscopes and the Beginnings of Germ Theory

Speaking of fractals and the unendingness of our horizons, Galileo and telescopes in general extended our horizons outward beyond the range of the stars we can see with the naked eye. Similarly, the invention of microscopes, about which we’ll spend a little time, has extended our horizons inward and downward into the realm of the tiny and invisible. A few people had imagined tiny somethings and, over the centuries, even that they might cause disease or be a factor in contagion. None of these ideas caught on widely, though, because the mainstream thinking was that disease was an imbalance in the system, and more specifically an imbalance of the four basic substances, or humors, in the body: blood, phlegm, black bile, and yellow bile. So treatment involved bleeding; purging, that is, taking substances that caused vomiting and diarrhea; provoking salivation and sweating; exercise; and so forth.

Several approaches led to germ theory. In the early 19th century, the activity that has now come to be called epidemiology began: noticing and recording which diseases happened how often and under which circumstances, really keeping the numbers and comparing them. This way of thinking, so common today, was still unknown a few centuries ago. But first, how could people actually find the agents of contagion? That, interestingly, was not so easy.

Let’s start with the microscope, or even before that, the whole idea of lenses and of combining lenses. The optics hadn’t really been worked out: the mathematics, the degrees of curve in a lens. Still, folks knew that curved glass magnifies: you can see that in a goldfish bowl. But if the curve is irregular or not just right, the image is distorted, so a lens that magnifies well has to be carefully constructed. I’m not about to go too much into the nature of the microscope; more interesting is how this tool opened up a whole world, and the implications of that world took more than two centuries to be appreciated!

Telescopes and microscopes both emerged around the same time, as an outgrowth of the sub-technology of lens-grinding, which also made possible more workable spectacles. The curve of the glass needs to be steady and regular, and it’s not just any old curve. All this emerged around the time of Galileo, in the early 17th century, around 1609, as people were figuring out how to grind and polish lenses and working out the optics, the mathematics of assessing the curve of the lens.

By around 1660, a few scientists in Europe (and remember, science was just beginning) were exploring not only the heavens but also the micro-world. One was Robert Hooke, whose compound microscope, with two different lenses working in combination, could magnify things around 40 to 50 times. Hooke was active in the newly founded Royal Society of London for Improving Natural Knowledge, exploring these frontiers, and he wrote about what he could see, like the legs of fleas!

Hooke’s microscope was about 16 inches tall, and in the picture to the right you can see some size comparisons: below the microscope are two ordinary microscope slides, each about 3/4 inch wide and 2½ inches long; and to the left is a relatively small gizmo that shows the relative size of Leeuwenhoek's microscope, to be described next.

In general, simple microscopes, like magnifying glasses, don't magnify all that much. But if you make that glass quite small and keep the curve tight, it actually magnifies more strongly than Hooke's compound two-lens microscopes!

As I said, this wasn’t the first microscope invented, but it was a variation that offered better resolution by a factor of ten than the microscopes then available!

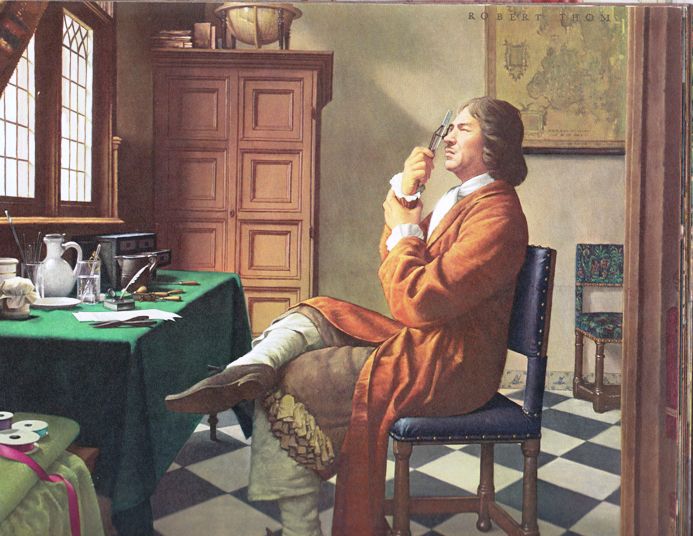

Leeuwenhoek made more than 250 of them in his later years. These had one lens, really a tiny globule of glass, finely ground so that it was clear and regular. The smaller and more tightly curved the lens, the greater the magnification, and van Leeuwenhoek’s were three to five times stronger than Hooke’s, allowing magnification of up to 200 times: enough to see one-celled animals (Leeuwenhoek called them animalcules) and even bacteria, as moving dots.

This was a time when lots of stuff was happening. Isaac Newton was talking about gravity. There was first bubonic plague in London, and then smallpox. Leeuwenhoek’s work came a few years later, around 1673. He reported a number of observations in his letters over the next ten years, and some were controversial. Note, first, that Leeuwenhoek’s work didn’t get connected much to the idea that the little animalcules he described might have anything to do with disease.

But he did open up the micro world, writing about one-celled animals in a drop of standing water, the scum of his teeth, the way the living, squiggling things died if he had just drunk hot coffee, even the number of little animalcules in semen—though he was most modest about that, presenting that in a separate letter.


Leeuwenhoek was impressed with the sheer number of these tiny creatures. He wrote, "There are more of these in my mouth than men in the whole kingdom." (Indeed, we’ll mention this again in the last lecture when I talk about dental plaque!)

So, to review: microscopes opened up new scales of vision. Hooke's microscope could perhaps see the human egg; but Leeuwenhoek's could see things ten times smaller, at the ten-micrometer level.

Now an interesting anecdote—and important for our appreciation of history, because I not infrequently wonder what breakthroughs are

Another interesting point: because the practical implications weren’t immediately apparent, the science at that point remained incomplete. Someone said of PhD dissertations that one collects the research gathering dust on other library shelves, compiles it, adds a bit to it, and the result ends up gathering dust in a library. Alas! Something like that happened here: hardly anyone heard of Leeuwenhoek’s work for over two hundred years, until it was rediscovered and its significance re-appreciated.

So a lesson of history that applies in many fields is that an idea requires marketing. This process of building knowledge isn't simple. If Professor X makes a discovery, and Dr. Y discovers something else that might build on or begin to make more sense of Professor X's findings, how does Dr. Y find out about what Professor X did? It's complicated in part by the possibility that Professor X may live in another country, perhaps even speak another language. Thus, here's a rough listing of some of the elements in an infrastructure of knowledge dissemination:

- Knowing how to read and write, and the eventual development of an infrastructure of materials to write on (e.g., parchment, papyrus, paper) and of various writing implements and inks

- Arrangements for travel, formats for letters, a beginning postal service

- A potential for wider dissemination because of printing, the beginnings of journals, multiple copies of books, newsletters

- The establishment of libraries and the possibility of copying or lending from those collections

- Easing the difficulty of transportation, allowing for meetings and discussions in person, international conferences, and, later, teleconferencing

- Computers, the Internet, E-mail, Websites, Search Engines (such as Google)

Knowledge-building, then, is not a simple process! It involves not just the problems in making a discovery, but also getting the word out, persuading others, and having the new ideas make an impact. Bare knowledge isn’t always what gets credit. There’s also the challenge of writing it up and getting that translated into the dominant language of science, which at times during the histories we’ll be considering did not happen; then there’s getting published, and getting enough people excited about the implications of what you’ve learned so that it gets used, and this also often did not happen! A comedian noted that the guy who invented the wheel was no slouch, but the one who really capitalized on the invention was the fellow who realized that you needed four of them to make a useful wagon! And wheel technology was even more complex than that: there was also a need to invent and refine sub-technologies, such as the axle, lubrication, springs, and other things that went into the evolution of the carriage. The same happened with the evolution of the microscope and other tools in the history of medicine.

The 1700s, the 18th century, saw not only the American Revolution but also some advances in microscope technology, though not many.

(Pictured: a microscope from 1735, still pretty big compared to a microscope slide, and a 1744 version.)

More about microscopes and technology on a supplementary webpage.

Summary

The microscope laid the foundation, and with this history we have touched on a few of the themes to be addressed in the following lectures. The idea that germs exist is only part of the story, though. It took the coordination of several ideas that still needed to be worked out, ranging from the question of how life arises to the problem of the nature of infection and disease. These will be addressed in the next lecture.